You can now stream Unreal Engine content to Meta Quest 2, Meta Quest 3, and Apple Vision Pro if you use the latest version of the Pixel Streaming Infrastructure and build Unreal Engine from source using this commit from ue5-main (coming soon to UE 5.5!). Detailed instructions are in the Instructions for testing section below.

Contents

- A brief timeline of Pixel Streaming VR

- Problem

- Solutions

- Putting it all together

- Future Work

- Special thanks

- Instructions for testing

A brief timeline of Pixel Streaming VR

- (2022) TensorWorks presents the “State of Pixel Streaming” at Unreal Fest and hears many feature requests for VR support.


- (2023) TensorWorks (credit: William Belcher) implements experimental support for VR Pixel Streaming into Unreal Engine 5.2.

Release notes + UE Docs (Video credit: Michael Wallace)

- (2024) There are rendering issues impacting users across all headsets, including the newly launched Apple Vision Pro.


Problem

It was clear that Pixel Streaming users were very interested in the VR streaming feature (see this GH issue); however, the feature was still too experimental and produced incorrect results.

But what specifically needed improving?

- Stereo imagery was not converging (GH issue). In the headset, each eye saw an image that was not correctly aligned to produce binocular vision (i.e. it looked like double vision).

- VR controller inputs were sometimes getting stuck. VR controller inputs, particularly the grip and trigger axes, would intermittently get stuck down even after they were released.

- VR controller axes were binary instead of analog. The trigger and grip axes were either fully pressed or released, despite being capable of reporting values in between.

- Pixel Streaming VR was not optimally configured for VR streaming. VR Pixel Streaming would stream at 60 FPS and, by default, would transmit a key frame every 300 frames. This is not ideal for VR, where HMDs much prefer a smooth 90 FPS.

- Perspective would warp on some headsets. When moving your head around, the perspective of the scene would appear to warp on some headsets (the image below illustrates the sort of warping that was occurring).

Solutions

TensorWorks devised the following solutions to each of the above problems:

Solution 1: Converged stereo imagery

The reason stereo imagery was not converging in the user’s headset was that the initial experimental implementation of Pixel Streaming VR used hard-coded values for the stereo rendering. This was quick to implement for the experiment; however, as headsets with different stereo rendering configurations were released, these hard-coded defaults became incorrect to varying degrees.

For instance, the rendering looked slightly wrong on the Meta Quest 2, but markedly wrong on the Meta Quest 3. Of course, it is unreasonable to hard-code different configurations for each headset. Instead, we needed a way to dynamically configure the stereo rendering in Pixel Streaming VR based on the headset being used.

The solution was to create a new Pixel Streaming message containing the headset configuration as reported by the WebXR API. To configure the stereo rendering on the Unreal Engine side, we determined that we would need to construct a perspective projection matrix for each eye and set the transform (position and orientation) of each rendered left/right eye view to match the respective headset eye transform. By transmitting this information from the browser to Unreal Engine, we would naturally account for device-specific configuration such as interpupillary distance (the distance between the eyes), canted displays, fields of view, and aspect ratios, even as new headsets were released!

Thus, we introduced a new message to Pixel Streaming VR, XREyeViews, which contains:

```typescript
// WebXR matrices are column-major; reading indices [0], [4], [8], [12],
// then [1], [5], [9], [13], and so on emits each matrix row by row.
this.webRtcController.streamMessageController.toStreamerHandlers.get('XREyeViews')([
    // Left eye 4x4 transform matrix
    leftEyeTrans[0], leftEyeTrans[4], leftEyeTrans[8], leftEyeTrans[12],
    leftEyeTrans[1], leftEyeTrans[5], leftEyeTrans[9], leftEyeTrans[13],
    leftEyeTrans[2], leftEyeTrans[6], leftEyeTrans[10], leftEyeTrans[14],
    leftEyeTrans[3], leftEyeTrans[7], leftEyeTrans[11], leftEyeTrans[15],
    // Left eye 4x4 projection matrix
    leftEyeProj[0], leftEyeProj[4], leftEyeProj[8], leftEyeProj[12],
    leftEyeProj[1], leftEyeProj[5], leftEyeProj[9], leftEyeProj[13],
    leftEyeProj[2], leftEyeProj[6], leftEyeProj[10], leftEyeProj[14],
    leftEyeProj[3], leftEyeProj[7], leftEyeProj[11], leftEyeProj[15],
    // Right eye 4x4 transform matrix
    rightEyeTrans[0], rightEyeTrans[4], rightEyeTrans[8], rightEyeTrans[12],
    rightEyeTrans[1], rightEyeTrans[5], rightEyeTrans[9], rightEyeTrans[13],
    rightEyeTrans[2], rightEyeTrans[6], rightEyeTrans[10], rightEyeTrans[14],
    rightEyeTrans[3], rightEyeTrans[7], rightEyeTrans[11], rightEyeTrans[15],
    // Right eye 4x4 projection matrix
    rightEyeProj[0], rightEyeProj[4], rightEyeProj[8], rightEyeProj[12],
    rightEyeProj[1], rightEyeProj[5], rightEyeProj[9], rightEyeProj[13],
    rightEyeProj[2], rightEyeProj[6], rightEyeProj[10], rightEyeProj[14],
    rightEyeProj[3], rightEyeProj[7], rightEyeProj[11], rightEyeProj[15],
    // HMD 4x4 transform matrix
    hmdTrans[0], hmdTrans[4], hmdTrans[8], hmdTrans[12],
    hmdTrans[1], hmdTrans[5], hmdTrans[9], hmdTrans[13],
    hmdTrans[2], hmdTrans[6], hmdTrans[10], hmdTrans[14],
    hmdTrans[3], hmdTrans[7], hmdTrans[11], hmdTrans[15],
]);
```

Note:

This is a relatively large message, so we only send it on initial connection or whenever it changes. The rest of the time we simply send the HMD transform.
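As a rough illustration of the message layout and the send-on-change rule described in this note, here is a minimal TypeScript sketch. It assumes the eye data arrive as column-major 4x4 arrays (as WebXR's XRView.transform.matrix and XRView.projectionMatrix are). The names EyeViewSender and eyeViewNumbers, and the HMDTransformOnly message kind, are hypothetical and are not part of the Pixel Streaming frontend.

```typescript
// A 4x4 matrix as WebXR supplies it: 16 numbers in column-major order.
type Mat4 = ArrayLike<number>;

// Hypothetical per-eye input. In a browser these would typically come from
// XRView.transform.matrix and XRView.projectionMatrix.
interface EyeView {
  transform: Mat4;
  projection: Mat4;
}

// Hypothetical message kinds used only in this sketch.
type Outgoing =
  | { kind: "XREyeViews"; payload: number[] }
  | { kind: "HMDTransformOnly"; payload: number[] };

// Emit a column-major matrix row by row: m[0], m[4], m[8], m[12], m[1], ...
function toRowMajor(m: Mat4): number[] {
  const out: number[] = [];
  for (let row = 0; row < 4; row++) {
    for (let col = 0; col < 4; col++) {
      out.push(m[col * 4 + row]);
    }
  }
  return out;
}

// The 64 eye-specific numbers: left transform, left projection,
// right transform, right projection (the HMD transform is appended later).
function eyeViewNumbers(left: EyeView, right: EyeView): number[] {
  return [
    ...toRowMajor(left.transform),
    ...toRowMajor(left.projection),
    ...toRowMajor(right.transform),
    ...toRowMajor(right.projection),
  ];
}

// Sends the full 80-number message on the first frame or whenever any eye
// value changes; otherwise sends only the 16-number HMD transform.
class EyeViewSender {
  private lastEyeViews: number[] | null = null;

  next(left: EyeView, right: EyeView, hmd: Mat4): Outgoing {
    const eyeViews = eyeViewNumbers(left, right);
    const hmdRows = toRowMajor(hmd);
    const prev = this.lastEyeViews;
    const changed = prev === null || eyeViews.some((v, i) => v !== prev[i]);
    if (changed) {
      this.lastEyeViews = eyeViews;
      return { kind: "XREyeViews", payload: [...eyeViews, ...hmdRows] };
    }
    return { kind: "HMDTransformOnly", payload: hmdRows };
  }
}
```

Exact equality is used for change detection here because unchanged values come straight from the same source each frame; a real implementation might prefer a small tolerance.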

Once this message is received by Unreal Engine, we extract the horizontal and vertical fields of view, the off-center projection offsets for each projection matrix (i.e. the vertical field of view may be constructed from non-symmetrical up and down angles), the interpupillary distance, the eye positions and orientations, the target aspect ratio, and the HMD transform. This step requires some special handling, as WebXR and Unreal Engine do not share the same coordinate system.
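The extraction step can be sketched as follows. This is a hedged TypeScript illustration, not the plugin's C++: it assumes a standard OpenGL-style perspective matrix in column-major order (the convention WebXR uses), recovers the tangents of the frustum's half-angles, and derives the fields of view, off-center offsets, and aspect ratio from them. The interpupillary distance is taken as the distance between the two eye translations. The coordinate conversion is one common mapping from WebXR (right-handed, Y up, -Z forward, meters) to Unreal (left-handed, Z up, X forward, centimeters); treat it as an assumption rather than the plugin's exact code.

```typescript
// Column-major 4x4 matrix, as WebXR provides it.
type Mat4 = ArrayLike<number>;

interface ProjectionInfo {
  horizontalFovRad: number;
  verticalFovRad: number;
  offCenterX: number; // row 0, column 2 (0 for a horizontally symmetric frustum)
  offCenterY: number; // row 1, column 2 (0 for a vertically symmetric frustum)
  aspectRatio: number; // frustum width / height
}

// Decompose an OpenGL-style perspective projection matrix.
function decomposeProjection(p: Mat4): ProjectionInfo {
  const sx = p[0]; // 2n / (r - l)
  const sy = p[5]; // 2n / (t - b)
  const ox = p[8]; // (r + l) / (r - l)
  const oy = p[9]; // (t + b) / (t - b)
  // Tangents of the four frustum half-angles (left and down are negative).
  const tanRight = (1 + ox) / sx;
  const tanLeft = (ox - 1) / sx;
  const tanUp = (1 + oy) / sy;
  const tanDown = (oy - 1) / sy;
  return {
    horizontalFovRad: Math.atan(tanRight) - Math.atan(tanLeft),
    verticalFovRad: Math.atan(tanUp) - Math.atan(tanDown),
    offCenterX: ox,
    offCenterY: oy,
    aspectRatio: sy / sx,
  };
}

// Distance between the two eye positions (translation is in indices 12-14).
function interpupillaryDistance(leftEye: Mat4, rightEye: Mat4): number {
  const dx = rightEye[12] - leftEye[12];
  const dy = rightEye[13] - leftEye[13];
  const dz = rightEye[14] - leftEye[14];
  return Math.sqrt(dx * dx + dy * dy + dz * dz);
}

// Assumed mapping: Unreal X = -WebXR z, Y = WebXR x, Z = WebXR y, scaled m -> cm.
function webXrToUnrealPosition(x: number, y: number, z: number): [number, number, number] {
  const cmPerMeter = 100;
  return [-z * cmPerMeter, x * cmPerMeter, y * cmPerMeter];
}
```

The mapping matrix has determinant -1, which is what flips handedness between the two coordinate systems.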

Solution 2: VR controller inputs were sometimes getting stuck

After investigating the Pixel Streaming plugin, we found the culprit to be the following sequence of events:

- A controller axis was genuinely pressed by the user; then

- During a tick of Unreal Engine’s input processing, no new controller-pressed message arrived from the browser, so UE assumed the controller had been released; then

- New input would then arrive from the browser, but the controller had already been released, and these presses were marked as repeats.

The result was a “Schrödinger’s button press”: the button was seemingly reported as both pressed and released at the same time. This conflict would intermittently leave the axis stuck on a specific value.

The fix was to keep informing UE that the controller is pressed, even when there is no new message, until a release message arrives. This would not normally be a problem in a desktop UE application; however, network latency and timing can produce unusual input orderings that need special handling.
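A minimal sketch of that idea, assuming a hypothetical per-axis message with pressed and released variants: the axis stays latched at its last value on every tick until an explicit release arrives, so a tick without a new network message is never read as a release. LatchedAxis and AxisMessage are illustrative names, not the plugin's types; the real change lives in the plugin's C++.

```typescript
// Hypothetical message shapes for one controller axis (e.g. trigger or grip).
type AxisMessage =
  | { type: "pressed"; value: number }
  | { type: "released" };

interface AxisState {
  pressed: boolean;
  value: number;
}

// Latches an axis as pressed until an explicit release message arrives.
class LatchedAxis {
  private state: AxisState = { pressed: false, value: 0 };

  // Apply a message from the browser; returns true if it was a repeat press.
  onMessage(msg: AxisMessage): boolean {
    if (msg.type === "pressed") {
      const isRepeat = this.state.pressed;
      this.state = { pressed: true, value: msg.value };
      return isRepeat;
    }
    this.state = { pressed: false, value: 0 };
    return false;
  }

  // Called once per engine input tick, whether or not a message arrived.
  // The absence of a message leaves the latched state untouched.
  tick(): AxisState {
    return { ...this.state };
  }
}
```

Because the latch only changes on an explicit message, "pressed then silence then pressed again" reads as one continuous press with a repeat, rather than a phantom release followed by conflicting presses.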

The actual changes can be found in this commit.

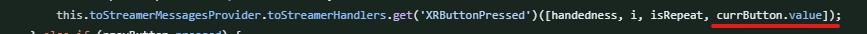

Solution 3: VR controller axes were binary instead of analog

This issue was most apparent with the VR hands in the UE VR template. If you ran this template using OpenXR, you could open and close the hands smoothly using the triggers. However, in Pixel Streaming VR the hands would snap to fully open or fully closed.

The solution was simple: we extended our button-pressed message to contain a value for the button axis. Once this value was transmitted and processed in the Pixel Streaming plugin, the hands worked as expected.
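On the browser side, WebXR controllers expose their buttons through the Gamepad API, where each GamepadButton carries an analog value between 0 and 1 alongside its boolean pressed flag. The sketch below shows one way to carry that value in a binary message. The byte layout and the message id 0x50 are hypothetical and are not Pixel Streaming's actual wire format.

```typescript
// Hypothetical layout (not the plugin's real format):
// [0] uint8 message id, [1] uint8 controller index, [2] uint8 button index,
// [3] uint8 isRepeat (0/1), [4..11] float64 analog value, little-endian.
const HYPOTHETICAL_BUTTON_PRESSED_ID = 0x50;

interface ButtonPressed {
  controllerIndex: number;
  buttonIndex: number;
  isRepeat: boolean;
  value: number; // analog value in [0, 1], e.g. from GamepadButton.value
}

function encodeButtonPressed(msg: ButtonPressed): ArrayBuffer {
  const buf = new ArrayBuffer(12);
  const view = new DataView(buf);
  view.setUint8(0, HYPOTHETICAL_BUTTON_PRESSED_ID);
  view.setUint8(1, msg.controllerIndex);
  view.setUint8(2, msg.buttonIndex);
  view.setUint8(3, msg.isRepeat ? 1 : 0);
  // Clamp so a misbehaving device cannot send values outside [0, 1].
  view.setFloat64(4, Math.min(1, Math.max(0, msg.value)), true);
  return buf;
}

function decodeButtonPressed(buf: ArrayBuffer): ButtonPressed {
  const view = new DataView(buf);
  if (view.getUint8(0) !== HYPOTHETICAL_BUTTON_PRESSED_ID) {
    throw new Error("unexpected message id");
  }
  return {
    controllerIndex: view.getUint8(1),
    buttonIndex: view.getUint8(2),
    isRepeat: view.getUint8(3) === 1,
    value: view.getFloat64(4, true),
  };
}
```

Sending the value rather than only a pressed/released flag is what lets the receiving side drive a smooth hand-closing animation instead of snapping between two states.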

Solution 4: Pixel Streaming VR was not optimally configured for VR streaming

As mentioned earlier, modern VR expects 90 FPS with very little hitching. Pixel Streaming VR was simply using the default Pixel Streaming settings of a maximum 60 FPS WebRTC stream.

This was trivially solved by raising the maximum stream FPS for VR Pixel Streaming to 90 FPS.

Additionally, Pixel Streaming was sending key frames at a fixed interval; this was disabled for VR streaming, so key frames are now only sent on request. This makes data throughput more consistent, resulting in fewer hitches on the browser side.

Note:

If you are on Unreal Engine versions prior to 5.4.2 and want to try this, you can simply pass the following launch arguments: -PixelStreamingWebRTCFPS=90 -PixelStreamingEncoderKeyframeInterval=0
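To make the launch options concrete, the TypeScript sketch below assembles the arguments mentioned in this post, adding the FPS and key-frame flags only for engine versions before 5.4.2. The helper names (parseVersion, isOlderThan, vrLaunchArgs) are hypothetical; only the flags themselves come from this post.

```typescript
type Version = [number, number, number];

// Parse "5.4.1" or "5.3" into [major, minor, patch]; missing parts become 0.
function parseVersion(text: string): Version {
  const parts = text.split(".").map((p) => parseInt(p, 10));
  const at = (i: number): number => {
    const v = parts[i];
    return v !== undefined && isFinite(v) ? v : 0;
  };
  return [at(0), at(1), at(2)];
}

// Lexicographic comparison of [major, minor, patch].
function isOlderThan(a: Version, b: Version): boolean {
  for (let i = 0; i < 3; i++) {
    if (a[i] !== b[i]) return a[i] < b[i];
  }
  return false;
}

// Flags from this post; the FPS and key-frame overrides are only added for
// engines older than 5.4.2.
function vrLaunchArgs(engineVersion: string, signallingUrl = "ws://127.0.0.1:8888"): string[] {
  const args = [`-pixelstreamingurl=${signallingUrl}`, "-PixelStreamingEnableHMD=true"];
  if (isOlderThan(parseVersion(engineVersion), [5, 4, 2])) {
    args.push("-PixelStreamingWebRTCFPS=90", "-PixelStreamingEncoderKeyframeInterval=0");
  }
  return args;
}
```

For example, vrLaunchArgs("5.4.1").join(" ") produces a string you could append to your project's launch command.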

Solution 5: Perspective would warp on some headsets

After the XREyeViews message above shipped in UE 5.4.2, we noticed that the perspective would warp on some headsets. Specifically, the rendering was normal on the Meta Quest 2 but distorted and warped on the Meta Quest 3.

This issue was quite difficult to diagnose; however, after carefully comparing the projection matrices sent by the Meta Quest 2 and Meta Quest 3, we noticed that the vertical off-center projection was asymmetrical on the Meta Quest 3 but symmetrical on the Meta Quest 2. A symmetrical frustum has a vertical offset of zero, so a wrong sign on that value has no visible effect, which is why the Meta Quest 2 looked correct. With that clue, we found that the sign of these values was set incorrectly, and once it was flipped, the warping and distortion were resolved.

Warped vertical perspective rendering:

```cpp
CurLeftEyeProjOffsetX = -CurLeftEyeProjMatrix.M[0][2];
CurLeftEyeProjOffsetY = CurLeftEyeProjMatrix.M[1][2];
CurRightEyeProjOffsetX = -CurRightEyeProjMatrix.M[0][2];
CurRightEyeProjOffsetY = CurRightEyeProjMatrix.M[1][2];
```

Normal vertical perspective rendering:

```cpp
CurLeftEyeProjOffsetX = -CurLeftEyeProjMatrix.M[0][2];
CurLeftEyeProjOffsetY = -CurLeftEyeProjMatrix.M[1][2];
CurRightEyeProjOffsetX = -CurRightEyeProjMatrix.M[0][2];
CurRightEyeProjOffsetY = -CurRightEyeProjMatrix.M[1][2];
```

Note:

The only difference is the sign of the vertical (Y) offsets: the warped version uses M[1][2] as-is, while the normal version negates it, matching the X offsets.
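To see why only some headsets were affected, the sketch below mirrors the two C++ versions in TypeScript using illustrative frustum values (made up for this example, not measured from any headset). With a symmetrical vertical frustum the offset is zero and both sign conventions agree; with an asymmetrical one they disagree, which is when the warping appears. The function names are hypothetical.

```typescript
// Row-major 4x4 matrix indexed m[row][col], mirroring the C++ M[row][col].
type RowMajor4 = number[][];

// Illustrative projection built from frustum half-angle tangents.
// Only the first two rows matter here; the depth rows are placeholders.
function projectionFromTangents(left: number, right: number, up: number, down: number): RowMajor4 {
  return [
    [2 / (right - left), 0, (right + left) / (right - left), 0],
    [0, 2 / (up - down), (up + down) / (up - down), 0],
    [0, 0, -1, -0.2],
    [0, 0, -1, 0],
  ];
}

type Convention = "warped" | "normal";

// Vertical offset as computed by each version of the C++ snippet.
function verticalOffset(m: RowMajor4, convention: Convention): number {
  return convention === "normal" ? -m[1][2] : m[1][2];
}

// True when the two conventions disagree, i.e. when the bug is visible.
function signChoiceMatters(m: RowMajor4): boolean {
  return verticalOffset(m, "warped") !== verticalOffset(m, "normal");
}

// Illustrative values only.
const symmetric = projectionFromTangents(-1, 1, 1, -1); // vertical offset 0
const asymmetric = projectionFromTangents(-1, 1, 0.9, -1.1); // vertical offset about -0.1
console.log(signChoiceMatters(symmetric), signChoiceMatters(asymmetric)); // logs: false true
```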

Putting it all together

Working Pixel Streaming VR on Meta Quest 3 and Apple Vision Pro!


Future Work

While this work paves the way for a much more robust Pixel Streaming VR solution that dynamically handles different headsets, there are a number of future work items to bring VR Pixel Streaming to a production-ready state:

- Add support for hand tracking.

- Add motion prediction to compensate for network latency.

- Fix the stuttering that occurs when moving your head around (curiously, this is only present in the HMD’s immersive VR mode, not in the HMD’s browser).

Special thanks

- All the TensorWorks team, especially William Belcher and Aidan Possemiers for insights while debugging and speculating.

- Alex Coulombe for testing, feedback, and videos.

- Tobias Chen for testing and feedback.

- Amanda Watson for suggestions and insights.

Instructions for testing

To test the advances discussed in this article, follow these instructions:

Pixel Streaming Infrastructure

- Pull the latest Pixel Streaming Infrastructure from the master branch.

- Make a self-signed certificate by following this guide.

- In a command prompt, run SignallingWebServer/platform_scripts/cmd/start.bat --https

- Visit https://localhost on your PC to confirm you have this part all set up (note: https).

- You should see the Pixel Streaming web page after you click the proceed to unsafe page warning.

Unreal Engine

- Pull this commit from the ue5-main branch.

- Build the Unreal Editor from source (sorry, this will take a while).

- Make a VR project.

- Enable Pixel Streaming plugin.

- Disable the OpenXR plugin and delete the asset guideline as per the docs.

- Launch your built project with -pixelstreamingurl=ws://127.0.0.1:8888 -PixelStreamingEnableHMD=true (and keep the signalling server running).

Headset

- Run ipconfig to find the local IP address of the machine running UE and the signalling server.

- In the headset browser, visit https://xxx.xxx.xx.xx <- your IP here (note: https).

- Click the start streaming button.

- Click the enter VR button.
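ipconfig is Windows-specific. As a cross-platform alternative, this sketch filters Node-style network interface data down to non-internal IPv4 addresses and turns each into the https URL to open in the headset. It takes the data as a parameter so it runs anywhere; NetIf, lanIPv4Addresses, and headsetUrls are hypothetical names.

```typescript
// Minimal shape of one entry in Node's os.networkInterfaces() result.
interface NetIf {
  address: string;
  family: string | number; // usually "IPv4"/"IPv6"; a few Node releases reported 4/6
  internal: boolean; // true for loopback interfaces
}

// Collect non-loopback IPv4 addresses across all interfaces.
function lanIPv4Addresses(ifaces: Record<string, NetIf[] | undefined>): string[] {
  const out: string[] = [];
  for (const name of Object.keys(ifaces)) {
    for (const ni of ifaces[name] ?? []) {
      const isV4 = ni.family === "IPv4" || ni.family === 4;
      if (isV4 && !ni.internal) out.push(ni.address);
    }
  }
  return out;
}

// Candidate URLs to type into the headset browser (note: https).
function headsetUrls(ifaces: Record<string, NetIf[] | undefined>): string[] {
  return lanIPv4Addresses(ifaces).map((ip) => `https://${ip}`);
}
```

In Node, you could pass the result of os.networkInterfaces() from the built-in os module; its entries include the address, family, and internal fields used here.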

Note:

This won’t work unless you build the engine from source, so you can’t skip that step by using a Launcher build or an already-built version of UE.